The Evolution of Trust

Trust is our most valuable asset. And right now, it’s being taken apart like a cheap flat-screen from a Black Friday sale. In the past, the world was simple: people believed the evening news, the professor with dusty books, or the doctor with a clipboard and a furrowed brow. Today? A TikTok AI avatar with a convincingly serious voice explains the financial markets, while chatbots provide medical diagnoses — more reliably than some family doctors.

TRUST IS KEY. But where is the keychain?

The problem? Information and disinformation move at the same speed. What does that mean for our trust? Who decides what is true? And most importantly: Can we trust a machine that has neither morality nor real judgment but only simulates them? Sure, trust evolves.

The only question is — in which direction?

AI as an enlightener?

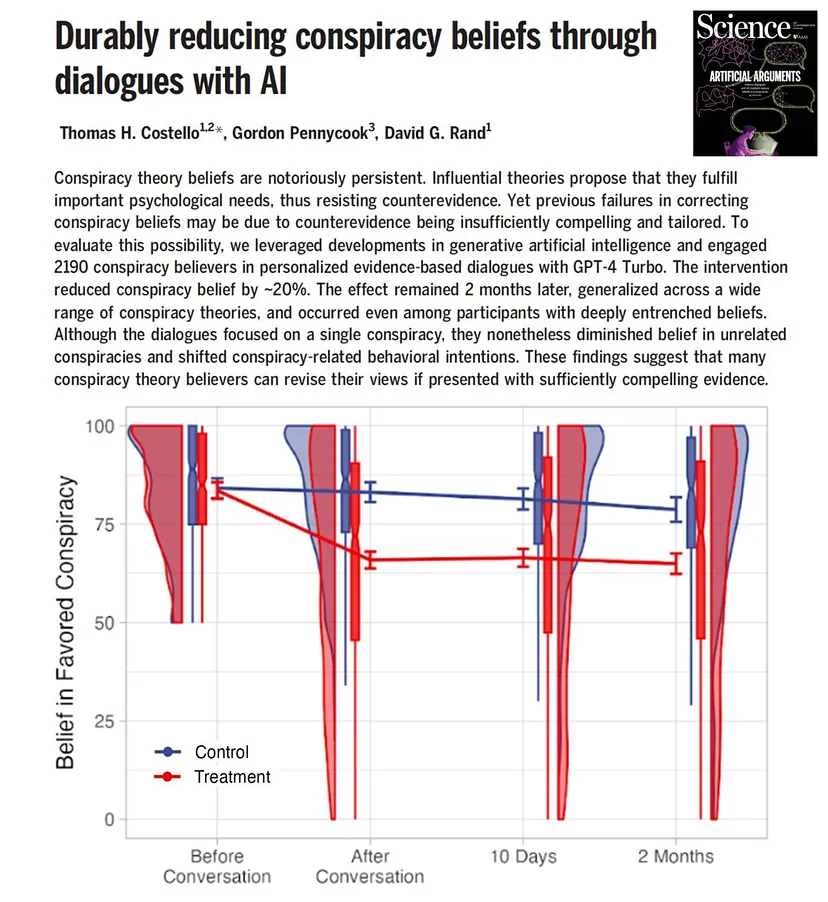

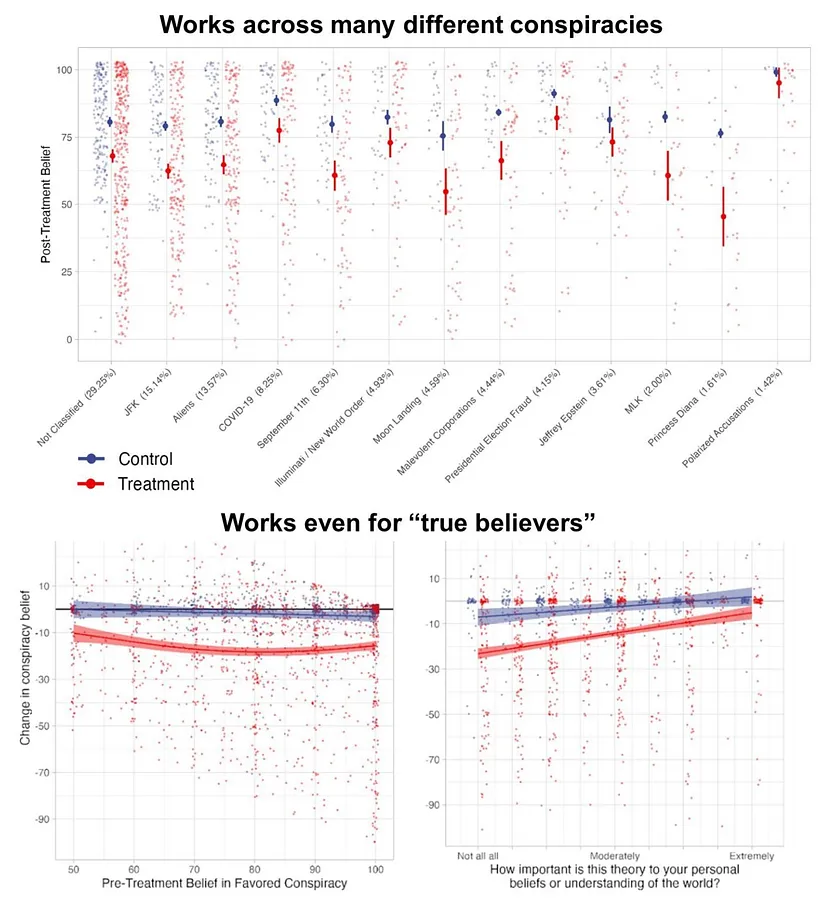

Two recent studies — one published in Science by Costello, Pennycook, and Rand, and a working paper by Goel et al. — provide initial scientific evidence that AI-based interventions can help steer people away from false beliefs.

- Costello et al.: In a study with 2,190 participants, personalized conversations with GPT-4 Turbo led to an average reduction in conspiracy beliefs by 20%. The effect lasted for over two months, indicating a lasting change in opinions. Particularly noteworthy: Even among individuals with strong conspiracy beliefs (“true believers”), a significant reduction was observed.

Impressive, and terrifying. If an AI chatbot can reduce conspiracy beliefs, it can just as easily amplify them. The effect lasts for two months? In the world of opinion shaping, that’s an eternity. The real question: Who controls the narrative? Because if algorithms can shape beliefs, then it’s no longer people in the driver’s seat, but machines, or those who program them.

- Goel et al.: In an experiment with 1,690 U.S. participants, researchers examined whether people trust AI-generated information more than human sources. The results showed that GPT-4 was about as effective at correcting false beliefs as a presumed human from the sample, but less convincing than a recognized expert.

The studies suggest that AI can serve as a tool for enlightenment — but with limitations. Our trust in a source depends not only on factual accuracy but also on perceived credibility.

Simply put: AI can deliver facts, but it cannot force trust. People believe people — especially those with authority. GPT-4 is convincing, but it lacks the charisma of an expert.

The real question: What happens when machines learn to be not just accurate, but charismatic?

The Psychology of Trust

People trust faces, not lines of code. The fact that AI-driven conversations can keep up with human discussions is impressive — but there’s a limit. Studies show that we are more likely to believe someone with a doctorate and graying temples than an anonymous source, even if both say the same thing. Why? Because our brains are conditioned to respect authority.

Goel et al. confirm exactly this: An expert persuades, while AI informs. The difference? Trust.

And trust is not just a matter of logic — but of psychology.

Here lies the paradox: AI can provide objective, fact-based answers — yet people prefer to trust other people. Why? Because trust is not purely a rational process. It is emotional, social, and culturally embedded. Even if AI can demonstrably reduce belief in conspiracies, its influence may always be limited. Trust is not just built on accuracy but on connection. We want to see eyes, hear a voice, feel a story. The real challenge, then, is not just whether AI tells the truth — but whether we are willing to listen.

The Dark Side

While these findings suggest that AI can spread knowledge and reduce misconceptions, it also carries risks. As Costello et al. highlight, the success of AI interventions heavily depends on how people perceive AI. If AI is seen as a manipulative actor, it could actually reinforce distrust.

Moreover, it remains unclear how repeated exposure to AI interventions might impact trust in human expertise in the long run.

Trust is fragile. Once shaken, it’s hard to repair. And this is precisely where the risk of AI lies.

Yes, it can spread knowledge, combat misinformation, and shape beliefs. But what happens when people see it not as a helper, but as a manipulator? According to Costello et al., the success of AI interventions depends on how they are perceived. And perception is reality. If people feel that a machine is trying to reeducate them, distrust is inevitable.

What does this mean for our long-term trust in human expertise? If AI consistently corrects us, will we eventually believe less in professors, journalists, or doctors — because the algorithm supposedly “knows better”?

The irony: A technology that could strengthen our trust could just as easily undermine it.

Whether AI is seen as an enlightener or a manipulator is not decided by the machine — but by us.

Another problem is that AI can be used not only for enlightenment but also for disinformation. Deepfakes, automated fake news generators, and algorithmic biases demonstrate that the same technology used for education can also be exploited for manipulation.

This brings us to Gardner’s Razor:

“If a system requires perfect human behavior to function correctly, it is bound to fail.”

In this sense, firmly believing that AI will be used solely for good would not only be naive — it would be hyper-exponentially optimistic.

The human remains the decisive factor

The studies by Costello et al. and Goel et al. make one thing clear: AI can combat misinformation, but it won’t reprogram human psychology. Trust isn’t built on facts alone; it’s shaped by social dynamics. We trust people, not just data points. An expert with charisma will outperform an algorithm with perfection. Every time.

The real challenge? Integrating AI in a way that it is seen as an ally, not as Orwellian thought police. Because if people feel that machines are taking control, the biggest casualty won’t be misinformation — but trust itself.

If AI changes our perception of truth, it won’t be through mere information delivery — but through the way we socially contextualize it.

The real question is not just whether we trust machines more than humans, but how we redefine the mechanisms of trust in a technology-driven society.

What remains?

Trust is not a static concept but a living, evolving fabric of expectations, experiences, and social constructs. In a technology-driven world, the question is not just whether we trust machines more than humans, but rather:

How does the very nature of trust transform when algorithms make decisions for us, when transparency is replaced by complexity, and when mistakes are no longer human — but systemic?

Humans have always trusted out of necessity — first their senses, then tools, later institutions. Now, we face the next stage of evolution: trusting something that does not measure itself by our standards but develops its own logic and mechanisms.

Trust in a machine is not an act of blind faith but a calculation of reliability, transparency, and ethical programming. But can trust endure when it is no longer tied to responsibility?

The paradox: The more flawless the machine, the more unforgivable its mistake.

A human doctor is allowed to make mistakes; an AI is not — even though both operate within probabilities. The truth remains: A system that demands perfect behavior is destined to fail. That’s why the real question is not just how we trust machines, but how we redefine our tolerance for errors and unpredictability.

The challenge lies not only in technology but, above all, in our ability to shape trust in an age of opacity.

The question remains — In Bot We Trust?

Source: www.medium.com