You can throw the dice and have them come up 11 many times in a row. It’s not very probable, but it is possible. It’s one of the harder concepts for people to understand. There’s a feeling of normalcy, a sense that things tomorrow will be similar to the way they are today, but that is an illusion. Repetition creates a pattern and a context we start to rely upon. We build a mental model of the world and how it works. As we’re each building our models over time, sharing and cooperation are sometimes at odds with personal concepts. Compromise and loss are as necessary as wins and achievements. Over time, things can settle into one pattern or another, but we all spend about the same amount of effort making sense of the day-to-day. It’s more of a cycle of ups and downs than some upward curve.

In our recent discussion, Jack and I talked about the weather. It’s 16 degrees in Chicago. In Portugal it’s also 16 degrees. One is bitterly cold, the other is sunny and warm. Fahrenheit vs. Celsius. It’s funny how our communication of something as concrete as temperature is entirely relative if the numbers are all you look at. This, I realized, was a perfect metaphor for how we train AI: it deals in absolutes, numbers, and data points, but does it truly understand context? Or is it just good at mimicking understanding?
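To make the point concrete, here is a minimal Python sketch of the arithmetic behind those two readings. The function and variable names are my own, chosen for illustration; the only thing it relies on is the standard conversion formula between the two scales.

```python
def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert a Fahrenheit reading to Celsius."""
    return (deg_f - 32) * 5 / 9


def celsius_to_fahrenheit(deg_c: float) -> float:
    """Convert a Celsius reading to Fahrenheit."""
    return deg_c * 9 / 5 + 32


# The same bare number, read on two different scales (illustrative names).
chicago_f = 16.0   # assumed to be a Fahrenheit reading
portugal_c = 16.0  # assumed to be a Celsius reading

print(f"Chicago: 16°F is {fahrenheit_to_celsius(chicago_f):.1f}°C")
print(f"Portugal: 16°C is {celsius_to_fahrenheit(portugal_c):.1f}°F")
```

Run as written, Chicago’s 16 comes out to about -8.9°C and Portugal’s 16 to about 60.8°F. The number is identical; only the unit, the context, tells you whether to grab a coat.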

It may seem inconsequential, but I was doing a similar exercise while choosing food at the store, frustrated by reading the label and trying to unpack the nutrition percentages. I just didn’t have a real understanding of what 100 grams was. I can ‘sense’ a pound (an orange) or 5 pounds (a bag of flour). I imagine inches (finger sections), and I know a mile or 10 when planning a bike ride. Others imagine the same things with a kilo or a kilometer. The only difference is that if they go a little more or less, their math is much easier than mine. Yet we all agree that there’s a way to describe critical concepts of distance, temperature, and mass. I found Australian Dollars to be confusingly mislabeled. They have no relationship to my concept of a dollar, so they were more of a struggle to understand than Swedish Kronor, where I also have no concept of what the number represents. Yen and Euros play a similar role: it takes much more effort to understand the basis for these simple measures. Similar games are played with the stock market or anything that tries to numerically represent value, a qualitative concept.

https://www.youtube.com/watch?v=haNAdU0iXQc

We were being productive in our discussion, but could it be measured? It’s about 50 minutes…

Measuring productivity

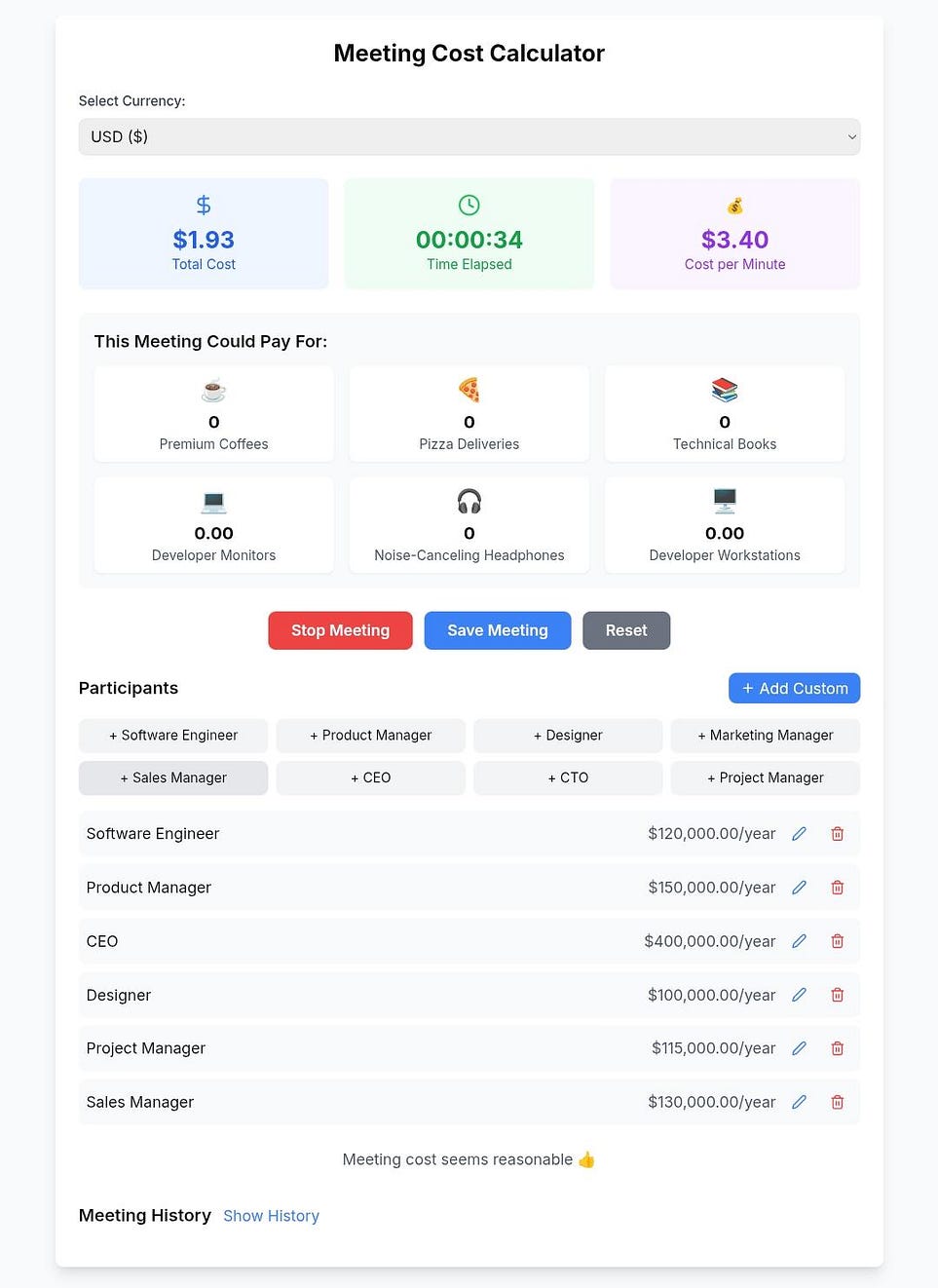

Microsoft’s CEO Satya Nadella recently admitted that AI so far has had no measurable impact on productivity. “The real benchmark is: the world growing at 10 percent,” he added. “Suddenly productivity goes up and the economy is growing at a faster rate. When that happens, we’ll be fine as an industry.” That’s quite a statement considering AI tools are being woven into everything from software development to customer service. Much like my trouble with grams and millimeters, I have to ask: what is the measure of productivity? What does that even mean? If growth is just a measure of money, is that productivity?

Maybe it’s the assembly-line mindset, where productivity is measured by how efficiently we produce something. But does a 20% boost in efficiency mean the work is better? Goodhart’s Law (when a measure becomes a target, it ceases to be a good measure) and the Soviet nail production fiasco remind us why raw numbers don’t always tell the full story.

An AI-generated image may look polished, but does it convey something in a more relevant way? Is it effective? AI doesn’t get tired, doesn’t procrastinate, doesn’t make coffee runs — but does that make it more productive? Or just more relentless?

Productivity and the Great Replacement Fear

For many workers, AI isn’t just a tool — it’s becoming a silent observer of their work. It’s supposed to assist, but at what point does it start making the decisions?

We should be asking: will AI be our tool, our coach, or our boss? Like us, it is trainable. If the realm is entirely in the digital world, it’s pretty good at building skills that are hard for us to mimic. The “co-pilot” model, as Microsoft likes to call it, suggests AI is here to assist. Over time, choices like these add up to trends, which can be good or bad. Training AI on how to do your job seems useful now, but where will you both be in a year?

Trends are a shorthand for outcomes. We changed the economics to favor personal, at-will ways to move around over public transportation. That outcome results in more roads, more cars, and more time, energy, and money spent getting from place to place. The tradeoffs may not have been foreseen when the mindset was the growth of suburbs and the auto industry. We build this limitation into our mental models, yet cars and trucks were once just assisting with some critical function, like delivering goods and services instead of relying on trains.

Take programmers: many now use AI to generate documentation, summarize meetings, and even write basic code. At what point does AI become the coder? Truckers have long been the poster children for automation fears. Still, the reality is more complex: the hard part of trucking isn’t driving. It’s the logistics, the waiting in detention to drop off a load, and the human negotiation of getting the goods in and out safely. AI doesn’t address any of those problems, which eat up time and don’t generate any income. They are similar to sitting and thinking over a problem. It may generate nothing, yet it’s essential to understanding and will inform the outcome. Performative action may seem productive: moving things around, making meetings, and updating spreadsheets. But if it doesn’t result in better outcomes, it’s busy work. If businesses shifted their focus from optimization to innovation, they might look more like universities, which put less value on exploiting and refining ideas than on exploring them. It’s Fahrenheit vs. Celsius. What are we trying to describe? Is the number even meaningful?

The Big AI Myth: What’s the Plan?

AI companies love to talk about AGI (artificial general intelligence) as if it’s right around the corner. But what’s the actual plan? If AI can fetch you tea (as OpenAI recently demonstrated), is that meaningful progress, or just a costly parlor trick? Hint: it’s a trick. And while I do love magic shows, this one lacks the awe factor that comes from a good trick. It seems to step into the uncanny valley, where you wonder why we can’t tell a story with a new actor, rather than de-age our parents’ movie stars.

Military AI is another frontier. Palantir and the Department of Defense are working on AI-driven decision-making for warfare. That’s not AGI, but it is based upon this nebulous yet ‘measurable’ concept of a powerful model. Yet, AI’s limitations remain: it can recognize patterns, optimize logistics, and maybe even assist with battle strategy — but does it understand the consequences of war? No. Without rehashing Asimov’s fictional concept of robots protecting humans, there is no such thing as a program that could grasp what it is to be human. The real danger isn’t AGI — it’s the unchecked decisions we let AI make in the name of efficiency. Whether in war or the workplace, AI optimizes without understanding consequences.

AI as a Coach vs. AI as a Boss

This brings us back to productivity. The real conversation isn’t about AI taking jobs. It’s about AI reshaping power structures. What if AI wasn’t replacing workers, but replacing bosses? While it may say more about the relationship between CEOs and their boards, 94% of 500 CEOs said that AI gives better advice than the board. Recent work from the University of Cambridge also claims that most CEOs are outperformed by this kind of automation.

Managers today make hiring decisions, performance evaluations, and project assignments based on a mix of experience, intuition, and bias. AI could, in theory, remove that bias, make purely quantitative, data-driven decisions, and optimize workflows. In my work in advertising, we pitched the concept of 3: we could come up with dozens of ideas, but when presenting to clients, we tended to have 3. Inevitably the client liked bits of each, so the final work reflected a strange creative average of whatever the team came up with. GenAI tools manage this in an instant. They are trained on this. What would be interesting is to have them do all this hard iteration while letting the workers build the resonances, the ideas that have depth or connect with the zeitgeist of the company or culture. That, I hope, is where workers, coders, writers, accountants, and scientists are incorporating GPT into their processes. Yet it’s not for productivity; it’s to have more time to understand the impact of their work.

But that’s also the problem: these tools don’t understand office politics, personal growth, or human motivation. In the role of boss or supervisor, an AI might tell you that, based on the data, you’re underperforming, but does it understand why? Would you trust an AI boss who never takes a sick day, never considers emotions, and never changes their mind?

Still, AI as a coach — an ever-present mentor that helps you improve, guides your work, and offers real-time feedback — feels much more useful than AI as an enforcer of corporate KPIs. Is this the co-pilot or (to quote strategy professor Richard Rumelt) the ability to identify one or two critical issues in the situation and see how those pivot points multiply the effectiveness of effort and help you focus action and resources on them? Personally, focusing on whether we should act is harder than ever. And that’s exactly where we need the most help.

The Future of Work and Play

So, where does that leave us?

Despite the hype and the fear, AI’s true value isn’t in mindless automation. It’s in reducing the cost of communication, which can aid experimentation and innovation. Creativity thrives in environments where failure isn’t expensive. If AI can help us iterate faster, make mistakes more cheaply, and explore more ideas, then maybe it’s not about replacing humans. Maybe it’s about expanding human potential, as most of the AI CEOs claim.

Work could become more like play — where AI sets the stage, presents challenges, and rewards problem-solving.

If AI reshapes the workplace, it won’t just change productivity — it will redefine what we value. But the real question isn’t how powerful AI becomes. It’s what kind of work we still want to do ourselves. Not to mention what aids and profits others and our communities.

If you are curious, here’s a way to explore the decisions needed to survive AI.

Source: www.medium.com